Evaluation of Recommender Systems

For Rating Prediction Task

Calculate the difference between the predicted and actual ratings of an item.

Mean Absolute Error (MAE)

Root Mean Squared Error (RMSE)
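Both measures can be computed directly from paired lists of predicted and actual ratings. The following is a minimal sketch; the function names `mae` and `rmse` and the sample ratings are made up for illustration and are not part of the original notes.

```python
import math


def mae(predicted, actual):
    """Mean Absolute Error: the average of |predicted - actual|."""
    errors = [abs(p - a) for p, a in zip(predicted, actual)]
    return sum(errors) / len(errors)


def rmse(predicted, actual):
    """Root Mean Squared Error: square root of the average squared error."""
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical ratings on a 1-5 scale (illustrative data only).
predicted = [4.0, 3.0, 5.0, 2.0]
actual = [5.0, 3.0, 3.0, 2.0]

print(mae(predicted, actual))   # 0.75
print(rmse(predicted, actual))  # sqrt(1.25), roughly 1.118
```

Because RMSE squares each error before averaging, the single error of 2 dominates the result, which is why RMSE is larger than MAE here.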

Performance Measures

Accuracy

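Accuracy is the fraction of all items that are classified correctly: (TP + TN) / (TP + TN + FP + FN), where TP, TN, FP and FN are the true/false positive/negative counts from the Information Retrieval Confusion Matrix.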

Precision & Recall

See also: Wikipedia, "Precision and Recall"; Information Retrieval Confusion Matrix.

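A recall of 1 can always be reached trivially by recommending every item. A precision of 1 requires that every single recommended item is relevant, which is much harder to achieve in practice.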

relevant … the items the user actually likes

recommended … the items the RS predicts the user will like

Precision

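Precision is a measure of how many of the recommended items are useful: the fraction of recommended items that are relevant to the user, |relevant ∩ recommended| / |recommended|.

→ The portion of your suggestions that were “correct”.

→ Better suited to Find Good Items tasks.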

Recall

Recall/Sensitivity (completeness) is the fraction of a user's relevant items that were actually recommended, |relevant ∩ recommended| / |relevant|.

→ Example: the user has 5 truly relevant items and 4 of them are recommended, so recall is 4/5 = 0.8.
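Precision and recall can both be sketched with plain set operations. The function names and item labels below are hypothetical; the data is chosen so that 4 of the 5 relevant items are recommended, as in the recall example.

```python
def precision(recommended, relevant):
    """Fraction of recommended items that are relevant."""
    recommended, relevant = set(recommended), set(relevant)
    if not recommended:
        return 0.0  # convention assumed here for an empty recommendation list
    return len(recommended & relevant) / len(recommended)


def recall(recommended, relevant):
    """Fraction of relevant items that were recommended."""
    recommended, relevant = set(recommended), set(relevant)
    if not relevant:
        return 0.0  # convention assumed here when nothing is relevant
    return len(recommended & relevant) / len(relevant)


# Illustrative data: 5 relevant items, 6 recommendations, 4 hits.
relevant = {"A", "B", "C", "D", "E"}
recommended = {"A", "B", "C", "D", "X", "Y"}

print(precision(recommended, relevant))  # 4/6, roughly 0.667
print(recall(recommended, relevant))     # 0.8
```

Recommending the whole catalogue would push recall to 1 while precision drops, which is why the two measures are usually reported together.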

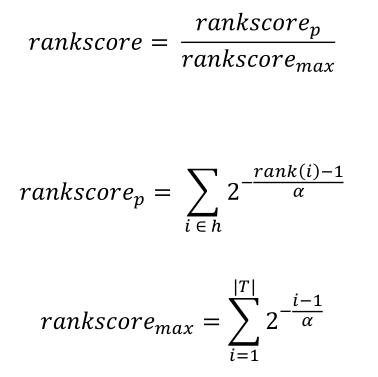

Rank Score

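Rank Score extends recall by also taking the position of each hit in the recommendation list into account.

h … hits, the set of correctly predicted items

T … the set of all relevant items

Rank half-life … controls how quickly the weight of a hit decays with its rank position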
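The legend above matches the half-life formulation commonly used for rank score, which is assumed in this sketch: each hit at position `rank` contributes 2^(-(rank - 1) / half_life), and the sum is normalised by the best achievable score, where all relevant items occupy the top positions. The function name `rank_score` and the sample data are hypothetical.

```python
def rank_score(ranked_items, relevant, half_life):
    """Half-life rank score, normalised to the range [0, 1].

    ranked_items: recommendation list, best item first
    relevant:     set T of all relevant items
    half_life:    rank half-life (assumed to be > 0)
    """
    relevant = set(relevant)
    # Score achieved: every hit is discounted by its 1-based rank.
    achieved = sum(
        2 ** (-(rank - 1) / half_life)
        for rank, item in enumerate(ranked_items, start=1)
        if item in relevant
    )
    # Best possible score: all |T| relevant items at ranks 1..|T|.
    best = sum(
        2 ** (-(i - 1) / half_life) for i in range(1, len(relevant) + 1)
    )
    return achieved / best if best else 0.0


# Illustrative data: hits at ranks 1 and 3, half-life of 2.
print(rank_score(["A", "X", "B"], {"A", "B"}, half_life=2))  # roughly 0.879
print(rank_score(["A", "B", "X"], {"A", "B"}, half_life=2))  # perfect ranking
```

With a half-life of 2, the hit at rank 3 counts only half as much as the hit at rank 1, so moving hits toward the top of the list raises the score.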

Information Retrieval Confusion Matrix

True Positives (TP) … Rated Good | Actually Good

False Positives (FP) … Rated Good | Actually Bad

True Negatives (TN) … Rated Bad | Actually Bad

False Negatives (FN) … Rated Bad | Actually Good
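The four cells can be counted with set operations, and accuracy follows from them. This sketch assumes that "Rated Good" corresponds to the recommended items and "Actually Good" to the relevant items; the function names and the ten-item catalogue are made up for illustration.

```python
def confusion_counts(all_items, recommended, relevant):
    """Return (TP, FP, TN, FN) for one user."""
    all_items = set(all_items)
    recommended = set(recommended)
    relevant = set(relevant)
    tp = len(recommended & relevant)              # rated good, actually good
    fp = len(recommended - relevant)              # rated good, actually bad
    fn = len(relevant - recommended)              # rated bad, actually good
    tn = len(all_items - recommended - relevant)  # rated bad, actually bad
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    """Fraction of all items that were classified correctly."""
    total = tp + fp + tn + fn
    return (tp + tn) / total if total else 0.0


# Illustrative catalogue of 10 items.
all_items = set("ABCDEXYPQR")
relevant = set("ABCDE")
recommended = set("ABCDXY")

counts = confusion_counts(all_items, recommended, relevant)
print(counts)             # (4, 2, 3, 1)
print(accuracy(*counts))  # 0.7
```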
